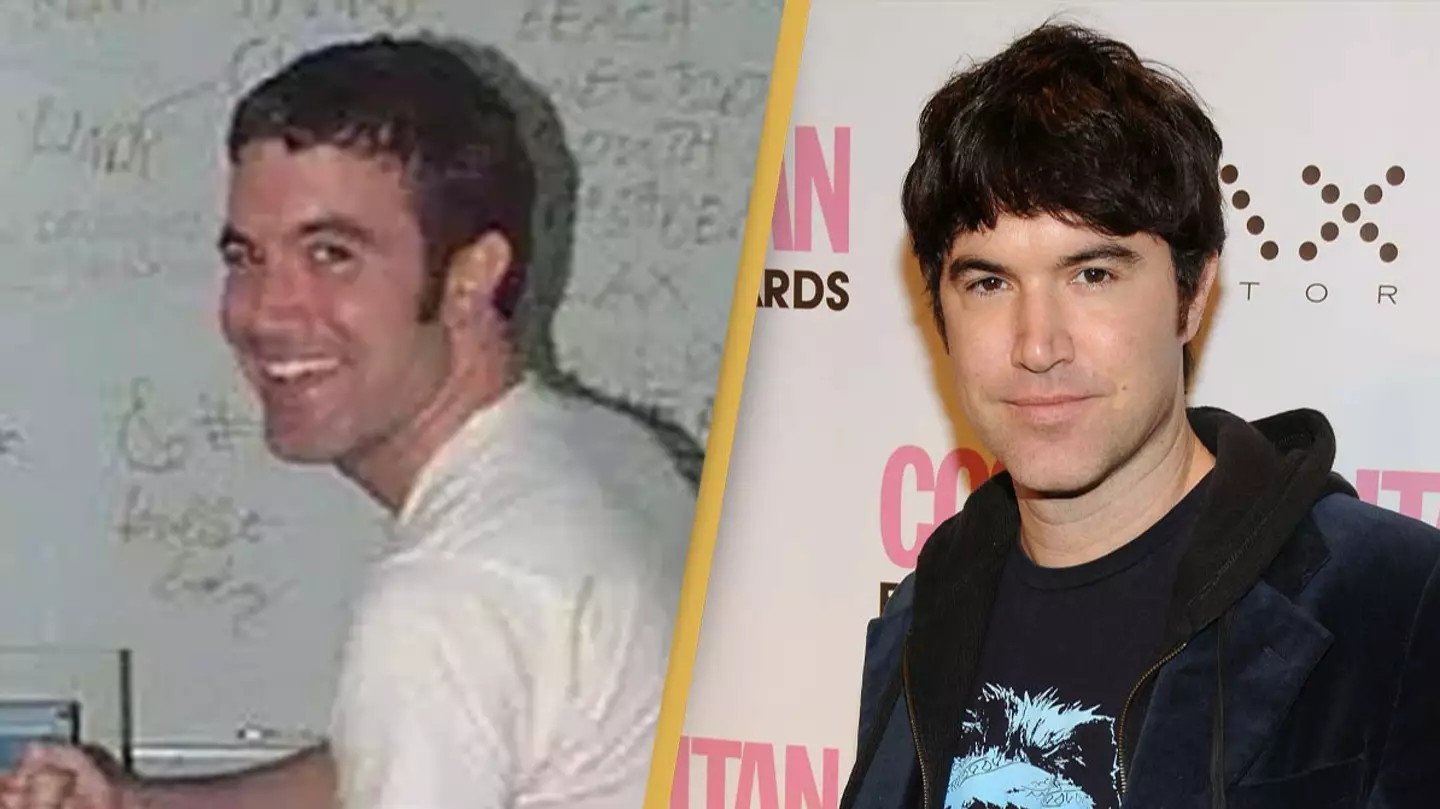

People reminiscing about Myspace are wondering what happened to Tom

The reason why no human has been back to the moon in the last 50 years might surprise you...

She took to Facebook to share a video of the ‘flying cylinder’

That's one way to do that, I suppose...

Stewart Butterfield's teenage daughter has been missing since Sunday (April 21)

The harrowing video shows how 144 people lost their lives in the horrific crash

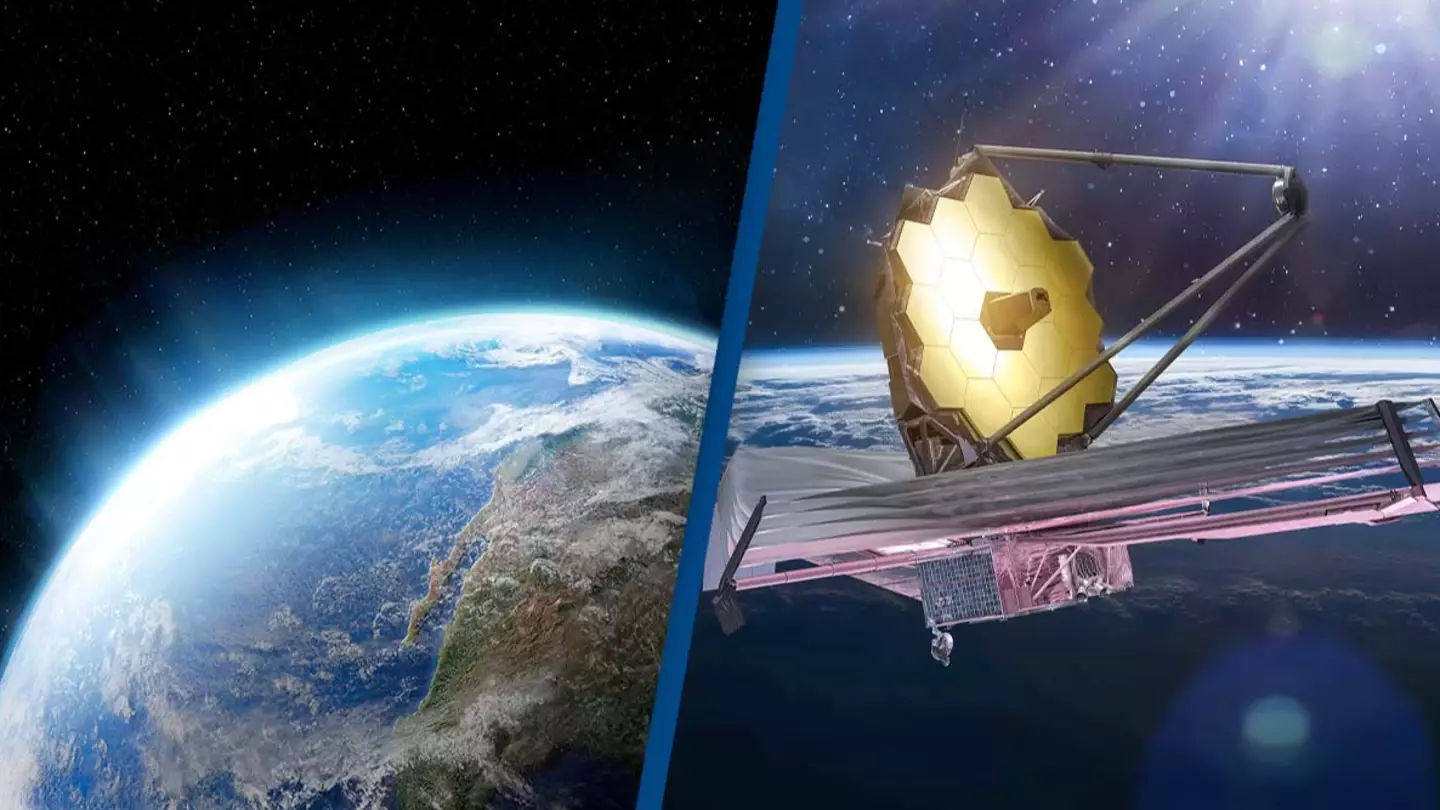

While many fear the short-term effects of climate change on our planet, the long-term effects will be even more devastating

US President Joe Biden started the clock on TikTok's potential ban after the bill passed in the Senate

The cosmonaut was on an important space mission when he fell from space

The photo helped save Christopher Precopia after he was wrongly accused of a brutal crime

"It’s so fake that, no other country tried it again"

If the asteroid, named Bennu, hit our planet it would cause huge destruction and there's a massive project underway to try and prevent it

There are several easy ways to make your iPhone battery life last longer

NASA's Voyager 1 stopped sending readable data in November 2023

A new study has suggested that Uranus may not be made of what we thought

The Tesla Cybertruck ended up looking like a smashed Lego toy

The nova explosion only happens around every 80 years or so

The footage shows the three astronauts entering Apollo 1 for the test

He says Tesla explained to him it was a 'known issue' with the Cybertruck

Scientists have discovered an X-ray technique that will 'transform the world'

Kim's Instagram followers raised questions about a particular aspect of the ad as she promoted the new Skims app

A recent filing claims the fault has 'increased the risk' of a potential crash

The Netflix true crime documentary took the world by storm, but people are now noticing something unusual about the photos used